In today’s bandwidth-intensive world, latency is an important factor that can impact performance and the end-user experience for modern cloud-based applications. For many CTOs, architects, and decision-makers at growing small and medium-sized businesses (SMBs), understanding and reducing latency is not just a technical need but also a strategic play.

Latency, or the time it takes for data to travel from one point to another, affects everything from how responsive your application feels to how quickly content is delivered and how smoothly media streams. As infrastructure increasingly relies on cloud object storage to manage terabytes or even petabytes of data, latency can determine whether an application meets its users’ expectations.

Let’s get into the nuances of latency and its impact on cloud storage performance.

Upload vs. Download Latency: What’s the Difference?

In the world of cloud storage, you’ll typically encounter two forms of latency: upload latency and download latency. Each can impact the responsiveness and efficiency of your cloud-based application.

Upload Latency

Upload latency refers to the delay when data is sent from a client or user’s device to the cloud. Live streaming applications, backup solutions, or any application that relies heavily on real-time data uploading will experience hiccups if upload latency is high, leading to buffering delays or momentary stream interruptions.

Download Latency

Download latency, on the other hand, is the delay when retrieving data from the cloud to the client or end user’s device. Download latency is particularly relevant for content delivery applications, such as on-demand video streaming platforms, e-commerce, or other web-based applications. Lower download latency means pages and media load faster, which makes for a better user experience.

Ideally, you’ll want to optimize for latency in both directions, but, depending on your use case and the type of application you are building, it’s important to understand the nuances of upload and download latency and their impact on your end users.

Decoding Cloud Latency: Key Factors and Their Impact

When it comes to cloud storage, latency is influenced by a number of factors, each of which affects the overall performance of your application. Let’s explore a few of these key factors.

Network Congestion

Like traffic on a freeway, packets of data can experience congestion on the internet. This can slow data transmission, especially during peak hours, and make an application feel laggy. Internet connection quality and network capacity also contribute to congestion.

Geographical Distance

Often overlooked, the physical distance from the client or end user’s device to the cloud origin store can have an impact on latency. The greater the distance between the client and the server, the farther the data has to travel and the longer transmission takes, which means higher latency.
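
To put rough numbers on the effect of distance, here is a minimal sketch that estimates the best-case round-trip time imposed by distance alone. It assumes signals travel through optical fiber at about 200,000 km/s (roughly two-thirds the speed of light in a vacuum), and the path lengths are hypothetical values chosen for illustration. Real-world latency will be higher, because routes are not straight lines and every hop adds processing time.

```python
# Rough lower bound on round-trip time (RTT) imposed by distance alone.
# Assumption: signals travel through optical fiber at about 200,000 km/s,
# roughly two-thirds the speed of light in a vacuum.
FIBER_SPEED_KM_PER_S = 200_000


def min_rtt_ms(distance_km: float) -> float:
    """Return the best-case round-trip time in milliseconds."""
    one_way_seconds = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_seconds * 1000


# Hypothetical path lengths, for illustration only.
example_paths = [
    ("same metro", 50),
    ("cross-country", 4_000),
    ("intercontinental", 10_000),
]

for label, km in example_paths:
    print(f"{label:>16}: {min_rtt_ms(km):6.1f} ms minimum RTT")
```

Even in this idealized model, a 10,000 km path cannot complete a round trip in less than about 100 ms, which is why storing data closer to users matters.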

Infrastructure Components

The quality of infrastructure, including routers, switches, and cables, may affect network performance and latency numbers. Modern hardware, such as fiber-optic cables, can reduce latency, unlike outdated systems that don’t meet current demands. Often, you don’t have full control over all of these infrastructure elements, but being aware of potential bottlenecks can help guide upgrades where they are possible.

Technical Processes

- TCP Handshake: Before any data is exchanged, the client and server complete a three-way handshake. This adds at least one round trip of delay to every new connection, which is why reusing existing connections helps.

- DNS Resolution: The time it takes to resolve a domain name to its IP address adds to latency. Faster DNS resolution gives a small reduction in total latency.

- Data Routing: Data does not necessarily travel in a straight line from its source to its destination. Latency is influenced by the effectiveness of routing algorithms and the number of hops that data must make.
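
To see how much the first two of these processes contribute, the following sketch times DNS resolution and the TCP handshake separately using only Python’s standard `socket` module. The function name `time_dns_and_connect` and the default port are assumptions made for this example, and the measurements are approximate: the operating system may cache DNS answers, so repeat lookups will often look much faster than the first one.

```python
import socket
import time


def time_dns_and_connect(host: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Time DNS resolution and the TCP handshake separately, in milliseconds."""
    start = time.perf_counter()
    # Resolve the hostname; the first returned address is used to connect.
    family, socktype, proto, _, sockaddr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM
    )[0]
    dns_ms = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout)
        # connect() returns once the three-way handshake has completed.
        sock.connect(sockaddr)
    tcp_connect_ms = (time.perf_counter() - start) * 1000

    return {"dns_ms": dns_ms, "tcp_connect_ms": tcp_connect_ms}


# Example usage (requires network access; the hostname is a placeholder):
# print(time_dns_and_connect("example.com"))
```

Running this against the same host from different regions is a simple way to compare how distance and routing affect connection setup time.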

Reducing latency and improving application performance are important for businesses that frequently access data in cloud storage. Ways to do so include selecting providers with strategically positioned data centers, fine-tuning network configurations, and understanding how internet infrastructure affects the latency of their applications.

Minimizing Latency With Content Delivery Networks (CDNs)

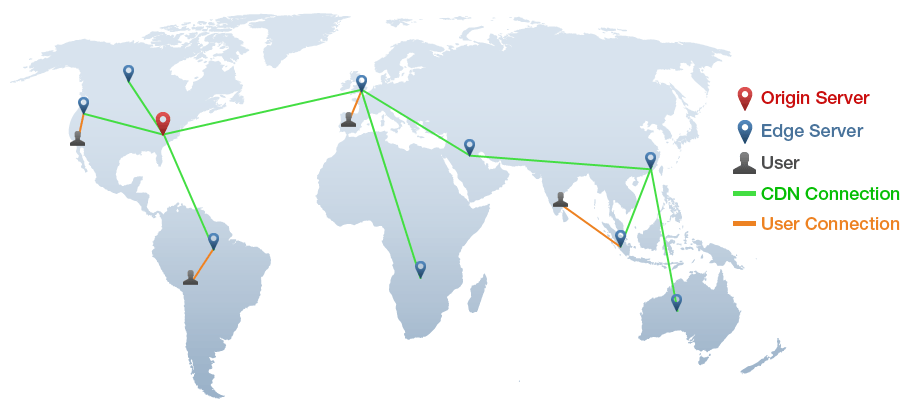

You can reduce latency further by layering a content delivery network (CDN) in front of your origin storage. CDNs reduce the time it takes for content to reach the end user by caching data on servers distributed across multiple geographic locations. When your end user requests or downloads content, the CDN delivers it from the nearest server, minimizing the distance the data has to travel, which significantly reduces latency.

Backblaze B2 Cloud Storage integrates with multiple CDN solutions, including Fastly, bunny.net, and Cloudflare. And, Backblaze offers the additional benefit of free egress between where the data is stored and the CDN’s edge servers. Together, the CDN reduces latency and the free egress keeps bandwidth costs down, making the combination cost-effective for businesses building bandwidth-intensive applications such as on-demand media streaming.

To get slightly into the technical weeds, CDNs cache content at the edge of the network. Once content is stored on a CDN server, subsequent requests for it are served from that cache and do not need to go back to the origin server.

This reduces the load on the origin server and reduces the time needed to deliver the content to the user. For companies using cloud storage, integrating CDNs into their infrastructure is an effective configuration to improve the global availability of content, making it an important aspect of cloud storage and application performance optimization.
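
The caching behavior described above can be illustrated with a toy model. This is a simplified sketch, not how any particular CDN is implemented: the `Origin` and `EdgeCache` classes are hypothetical, and real CDNs also handle cache expiry, eviction, and many edge locations.

```python
class Origin:
    """Stand-in for origin storage; counts how often it is asked for data."""

    def __init__(self, objects: dict[str, bytes]):
        self.objects = objects
        self.requests = 0

    def get(self, key: str) -> bytes:
        self.requests += 1
        return self.objects[key]


class EdgeCache:
    """Toy CDN edge node: serve from cache, fall back to origin on a miss."""

    def __init__(self, origin: Origin):
        self.origin = origin
        self.cache: dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self.cache:
            self.hits += 1
            return self.cache[key]
        # Cache miss: fetch from the origin once, then keep a copy at the edge.
        self.misses += 1
        data = self.origin.get(key)
        self.cache[key] = data
        return data


origin = Origin({"track.mp3": b"audio-bytes"})
edge = EdgeCache(origin)

# Ten requests for the same object reach the origin only once.
for _ in range(10):
    edge.get("track.mp3")

print(f"hits={edge.hits} misses={edge.misses} origin_requests={origin.requests}")
# prints: hits=9 misses=1 origin_requests=1
```

Only the first request pays the full cost of a trip to the origin; the other nine are answered at the edge.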

Case Study: Musify Improves Latency and Reduces Cloud Bill by 70%

To illustrate the impact of reduced latency on performance, consider the example of music streaming platform Musify. By moving from Amazon S3 to Backblaze B2 and leveraging the partnership with Cloudflare, Musify significantly improved its service offering. Musify egresses about 1PB of data per month, which, under traditional cloud storage pricing models, can lead to significant costs. Because Backblaze and Cloudflare are both members of the Bandwidth Alliance, Musify now has no data transfer costs, contributing to an estimated 70% reduction in cloud spend. And, thanks to the high cache hit ratio, 90% of the transfer takes place in the CDN layer, which helps maintain high performance, regardless of the location of the file or the user.
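
As a back-of-the-envelope illustration of what a 90% cache hit ratio means, the sketch below splits the roughly 1PB of monthly egress from the case study between the CDN and the origin, then estimates an average request latency. The edge and origin latency figures are hypothetical placeholders, not measurements from Musify, and the calculation assumes the hit ratio applies evenly across the transferred volume.

```python
def split_traffic_tb(total_tb: float, hit_ratio: float) -> tuple[float, float]:
    """Return (TB served by the CDN, TB served by the origin)."""
    from_cdn = total_tb * hit_ratio
    return from_cdn, total_tb - from_cdn


def expected_latency_ms(hit_ratio: float, edge_ms: float, origin_ms: float) -> float:
    """Average latency when hits are served at the edge and misses by the origin."""
    return hit_ratio * edge_ms + (1 - hit_ratio) * origin_ms


MONTHLY_EGRESS_TB = 1_000  # about 1PB, from the case study (decimal units)
CACHE_HIT_RATIO = 0.90     # from the case study

# Hypothetical latencies, for illustration only.
EDGE_MS = 20.0
ORIGIN_MS = 150.0

cdn_tb, origin_tb = split_traffic_tb(MONTHLY_EGRESS_TB, CACHE_HIT_RATIO)
average_ms = expected_latency_ms(CACHE_HIT_RATIO, EDGE_MS, ORIGIN_MS)

print(f"Served from CDN:    {cdn_tb:.0f} TB")
print(f"Served from origin: {origin_tb:.0f} TB")
print(f"Average latency:    {average_ms:.0f} ms")
```

Under these assumptions, only about a tenth of the traffic ever reaches the origin, and the average latency sits much closer to the edge figure than to the origin figure.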

Latency Wrap Up

As we wrap up our look at the role latency plays in cloud-based applications, it’s clear that understanding and strategically reducing latency is essential for CTOs, architects, and decision-makers building many of the modern applications we all use today. Several factors affect upload and download latency, and understanding them is the first step toward improving performance.

Additionally, Backblaze B2’s integrations with CDNs like Fastly, bunny.net, and Cloudflare offer a cost-effective way to improve performance and reduce latency. The strategic decisions Musify made demonstrate how reducing latency with a CDN can significantly improve content delivery while saving on egress costs and reducing overall business OpEx.

For additional guidance on reducing latency, improving time to first byte (TTFB), and boosting overall performance, “Cloud Performance and When It Matters” offers a deeper, more technical look.

If you’re keen to explore how an object storage platform can support your needs and help scale your bandwidth-intensive applications, read more about Backblaze B2 Cloud Storage.