It’s easy to open a data center, right? All you have to do is connect a bunch of hard drives to power and the internet, find a building, and you’re off to the races.

Well, not exactly. Building and using one Storage Pod is quite a bit different than managing exabytes of data. As the world has grown more connected, the demand for data centers has grown—and then along comes artificial intelligence (AI), with processing and storage demands that amp up the need even more.

That, of course, has real-world impacts, and we’re here to chat about why. Today we’re going to talk about power, one of the single biggest costs of running a data center, how it has impacts far beyond a simple utility bill, and what role temperature plays in things.

How Much Power Does a Data Center Use?

There’s no “normal” when it comes to the total amount of power a data center will need, as data centers vary in size. Here are a few figures that can help get us on the same page about scale:

- The largest data center market in the world is in Northern Virginia in the United States, and it has a 2,552 megawatt capacity. (By the way: 1MW = 1 million watts. For context, one megawatt is enough power for about 200 American homes.)

- In 2023, data centers consumed 7.4 gigawatts of power. (1GW = 1 billion watts.)

- That’s approximately 1–1.3% of total power in the world. Expand that to all IT energy demand, and that number is about 10% of global energy consumption.

The goal of a data center is to be always online. That means that there are redundant systems of power, including what comes in from the grid as well as generators and high-tech battery systems like uninterruptible power supplies (UPS), running 24 hours a day to keep servers storing and processing data and connected to networks. To keep all that equipment running well, it needs to stay in a healthy temperature (and humidity) range, which sounds much, much simpler than it is.

Measuring Power Usage

One of the most popular metrics for tracking power efficiency in data centers is power usage effectiveness (PUE), which is the ratio of the total amount of energy used by a data center to the energy delivered to computing equipment.

Note that this metric divides power usage into two main categories: what you spend keeping devices online (which we’ll call “IT load” for shorthand purposes), and “overhead,” which consists largely of the power dedicated to cooling your data center down.

There are valid criticisms of the metric, including that improvements to IT load will actually make your metric worse: You’re being more efficient about IT power, but your overhead stays the same, so your PUE goes up and you look less efficient even though you’re using less power overall. Still, it gives companies a repeatable way to measure against themselves and others over time, including directly comparing seasons year to year, so it’s a widely adopted metric.
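To make that criticism concrete, here is a minimal sketch of the PUE ratio as defined above. The facility figures (1,000 kW of IT load, 500 kW of overhead, a 20% IT efficiency gain) are made up for illustration, and the function name `pue` is our own.

```python
def pue(it_load_kw: float, overhead_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_load_kw <= 0:
        raise ValueError("IT load must be positive")
    return (it_load_kw + overhead_kw) / it_load_kw


# Hypothetical facility: 1,000 kW of IT load and 500 kW of overhead.
before = pue(it_load_kw=1000, overhead_kw=500)

# Same facility after the IT equipment becomes 20% more efficient,
# with overhead (mostly cooling) assumed to stay exactly the same.
after = pue(it_load_kw=800, overhead_kw=500)

print(f"Before: total 1500 kW, PUE {before:.3f}")
print(f"After:  total 1300 kW, PUE {after:.3f}")
```

In this made-up case total power drops by 200 kW while the PUE rises, which is exactly the quirk critics point to. In a real facility overhead would likely fall somewhat too, since less IT power means less heat to remove.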

Your IT load is a relatively predictable number. Manufacturers tell you the wattage of your device (or you can calculate it based on your device’s specs), then you take that number and plan for it being always online. The sum of all your devices running 24 hours a day is your IT power spend.
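As a rough sketch of that arithmetic, the snippet below sums rated wattage across a device inventory and converts it to daily energy use. The inventory, device names, and wattages are all hypothetical, and real hardware rarely draws its full rated wattage around the clock, so treat the result as a planning ceiling.

```python
HOURS_PER_DAY = 24

# Hypothetical inventory: (device name, rated watts per unit, unit count).
inventory = [
    ("storage server", 750, 40),
    ("network switch", 150, 4),
    ("management node", 300, 2),
]


def it_load_watts(devices):
    """Sum the rated wattage of every unit in the inventory."""
    return sum(watts * count for _name, watts, count in devices)


def daily_energy_kwh(load_watts, hours=HOURS_PER_DAY):
    """Energy used in a day, assuming the load runs the whole time."""
    return load_watts * hours / 1000


load = it_load_watts(inventory)
print(f"IT load: {load} W")
print(f"Daily energy: {daily_energy_kwh(load):.1f} kWh")
```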

Comparatively, doing the same for cooling is a bit more complicated—and it accounts for approximately 40% of power usage.

What Increases Temperature in a Data Center?

Any time you’re using power, you’re creating heat. So the first thing you consider is always your IT load. You don’t want your servers overtaxed—most folks agree that you want to run at about 80% of capacity to keep things kosher—but you also don’t want to have a bunch of servers sitting around idle when you return to off-peak usage. Even at rest, they’re still consuming power.

So, the methodology around temperature mitigation always starts at power reduction—which means that growth, IT efficiencies, right-sizing for your capacity, and even device provisioning are an inextricable part of the conversation. And, you create more heat when you’re asking an electrical component to work harder—so, more processing for things like AI tasks means more power and more heat.

And, there are a number of other things that can compound or create heat: the types of drives or processors in the servers, the layout of the servers within the data center, people, lights, and the ambient temperature just on the other side of the data center walls.

Brief reminder that servers look like this:

When you’re building a server, fundamentally what you’re doing is shoving a bunch of electrical components in a box. Yes, there are design choices about those boxes that help mitigate temperature, but just like a smaller room heating up more quickly than a warehouse, you are containing and concentrating a heat source.

We humans generate heat and need lights to see, so the folks who work in data centers have to be taken into account when considering the overall temperature of the data center. Check out these formulas or this nifty calculator for rough numbers (with the caveat that you should always consult an expert and monitor your systems when you’re talking about real data centers):

- Heat produced by people = maximum number of people in the facility at one time x 100

- Heat output of lighting = 2.0 x floor area in square feet or 21.53 x floor area in square meters
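The two rules of thumb above translate directly into code. This is only a sketch: the text does not state units, so the results are assumed here to be in watts, and the headcount and floor area in the example are invented.

```python
# Multipliers taken from the formulas above. Units are not stated in the
# text; this sketch assumes the results are watts of heat.
HEAT_PER_PERSON = 100
LIGHTING_HEAT_PER_SQ_FT = 2.0
LIGHTING_HEAT_PER_SQ_M = 21.53


def people_heat(max_people):
    """Heat from the maximum number of people in the facility at once."""
    return max_people * HEAT_PER_PERSON


def lighting_heat_sq_ft(floor_area_sq_ft):
    """Heat from lighting, given floor area in square feet."""
    return LIGHTING_HEAT_PER_SQ_FT * floor_area_sq_ft


def lighting_heat_sq_m(floor_area_sq_m):
    """Heat from lighting, given floor area in square meters."""
    return LIGHTING_HEAT_PER_SQ_M * floor_area_sq_m


# Hypothetical facility: up to 10 people on a 5,000 square foot floor.
total = people_heat(10) + lighting_heat_sq_ft(5000)
print(f"People: {people_heat(10)}, lighting: {lighting_heat_sq_ft(5000)}")
print(f"Combined non-IT heat from people and lights: {total}")
```

The square-foot and square-meter versions should roughly agree for the same floor, since 5,000 square feet is about 464.5 square meters.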

Also, your data center exists in the real world, and we haven’t (yet) learned to control the weather—so you also have to factor in fighting the external temperature when you’re bringing things back to ideal conditions. That’s led to a movement towards building data centers in new locations. It’s important to note that there are other reasons you might not want to move, however, including network infrastructure.

Accounting for people and the real world also means that there will be peak usage times, which is to say that even in a global economy, there are times when more people are asking to use their data (and their dryers, so if you’re reliant on a consumer power grid, you’ll also see the price of power spike). Aside from the cost, more people using their data = more processing = more power.

How Is Temperature Mitigated in Data Centers?

Cooling down your data center with fans, air conditioners, and water also uses power (and generates heat). Different methods of cooling use different amounts of power—water cooling in server doors vs. traditional high-capacity air conditioners, for example.

Talking about real numbers here gets a bit tricky. Data centers aren’t a standard size. As data centers get larger, the environment gets more complex, expanding the potential types of problems, while also increasing the net benefit of changes that might not have a visible impact in smaller data centers. It’s like any economy of scale: The field of “what is possible” is wider, rewards are bigger, and the relationship between change and impact is not linear. Studies have shown that creating larger data centers creates all sorts of benefits (which is an article in and of itself), and one of those specific benefits is greater power efficiency.

Most folks talk about the impact of different cooling technologies in a comparative way, e.g., “we saw a 30% reduction in heat.” And, many of the methods of mitigating temperature are about preventing the need to use power in the first place. For that reason, it’s arguably more useful to think about the total power usage of the system. In that context, it’s useful to know that a single fan takes x amount of power and produces y amount of heat, but it’s more useful to think of them in relation to the net change on the overall temperature bottom line. With that in mind, let’s talk about some tactics data centers use to reduce temperature.

Customizing and Monitoring the Facility

One of the best ways to keep temperature regulated in your data center is to never let it get hotter than it needs to be in the first place, and every choice you make contributes to that overall total. For example, adding or removing servers from your pool changes your IT power consumption, which in turn affects temperature.

There are a whole host of things that come down to data centers being a purpose-built space, and most of them have to do with ensuring healthy airflow based on the system you’ve designed to move hot air out and cold air in.

No matter what tactics you’re using, monitoring your data center environment is essential to keeping your system healthy. Some devices in your environment will come with internal indicators, like SMART stats on drives, and, of course, folks also set up sensors that connect to a central monitoring system. Even if you’ve designed a “perfect” system in theory, things change over time, whether you’re accounting for adding new capacity or just dealing with good old entropy.

Here’s a non-exhaustive list of some of the ways data centers customize their environments:

- Raised Floors: This allows airflow or liquid cooling under the server rack in addition to the top, bottom, and sides.

- Containment, or Hot and Cold Rows: The strategy here is to keep the hot side of your servers facing each other and the cold parts facing outward. That means that you can create a cyclical air flow with the exhaust strategically pulling hot air out of hot space, cooling it, then pushing the cold air over the servers.

- Calibrated Vector Cooling: Basically, concentrated active cooling measures in areas you know are going to be hotter. This allows you to use fewer resources by cooling at the source of the heat instead of generally cooling the room.

- Cable Management: Keeping cords organized isn’t just pretty, it also makes sure you’re not restricting airflow.

- Blanking Panels: This is a fancy way of saying that you should plug up the holes between devices.

Air vs. Liquid-Based Cooling

Why not both? Most data centers end up using a combination of air- and water-based cooling at different points in the overall environment. And, other liquids have led to some very exciting innovations. Let’s go into a bit more detail.

Air-Based Cooling

Air-based cooling is all about understanding airflow and using that knowledge to extract hot air and move cold air over your servers.

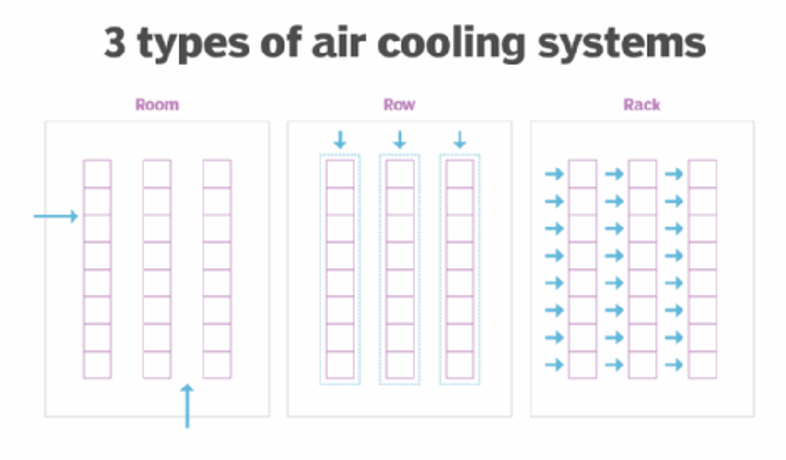

Air-based cooling is good up to a certain power density threshold: about 20 kilowatts (kW) per rack. Newer hardware can easily reach 30kW or higher, and high processing workloads can take that even higher. That said, air-based cooling has benefitted by becoming more targeted, and people talk about building strategies based on room, row, or rack.
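A quick way to apply that rule of thumb is to flag racks whose power draw exceeds the rough 20kW figure. The sketch below does that; the rack names and loads are hypothetical, and the threshold is the approximate number from the text rather than a hard engineering limit.

```python
# Approximate per-rack figure from the text, not a hard engineering limit.
AIR_COOLING_LIMIT_KW = 20


def exceeds_air_cooling(rack_kw, limit_kw=AIR_COOLING_LIMIT_KW):
    """Return True if a rack draws more power than air cooling handles well."""
    return rack_kw > limit_kw


# Hypothetical racks and their measured power draw in kW.
racks = {"rack-a": 12.5, "rack-b": 19.0, "rack-c": 30.0}

needs_other_cooling = [
    name for name, kw in racks.items() if exceeds_air_cooling(kw)
]
print("Racks to consider for liquid cooling:", needs_other_cooling)
```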

Water-Based Cooling

From here, it’s actually a pretty easy jump into water-based cooling. Water and other liquids are much better at transferring heat than air, about 50 to 1,000 times more, depending on the liquid you’re talking about. And, lots of traditional “air” cooling methods run warm air through a compressor (like in an air conditioner), which stores cold water and cools off the air, recirculating it into the data center. So, one fairly direct combination of this is the evaporative cooling tower:

Obviously water and electricity don’t naturally blend well, and one of the main concerns of using this method is leakage. Over time, folks have come up with some good, safe methods, designed around effectively containing the liquid. This increases the up-front cost, but has big payoffs for temperature mitigation. You find this methodology in rear door heat exchangers, which put a heat exchanger in (you guessed it) the rear door of a server rack, and direct-to-chip cooling, which contains the liquid in a plate, then embeds that plate directly in the hardware component.

So, we’ve got a piece of hardware and a server rack. The next step is the full data center turning itself into a heat exchanger, and that’s when you get Nautilus, a data center built over a body of water.

(Other) Liquid-Based Cooling, or Immersion Cooling

With the same sort of daring thought process of the people who said, “I bet we can fly if we jump off this cliff with some wings,” somewhere along the way, someone said, “It would cool down a lot faster if we just dunked it in liquid.” Liquid-based cooling utilizes dielectric liquids, which can safely come in contact with electrical components. Single-phase immersion uses fluids that don’t boil or undergo a phase change (think: similar to an oil), while two-phase immersion uses liquids that boil at low temperatures and carry heat away from the components as they convert to a gas.

You’ll see components being cooled this way in enclosed chassis, which can be used in rack-style environments; in open baths, which require specialized equipment; or in a hybrid of the two.

How Necessary Is This?

Let’s bring it back: we’re talking about all those technologies efficiently removing heat from a system because hotter environments break devices, which leads to downtime. And, we want to use efficient methods to remove heat because it means we can ask our devices to work harder without having to spend electricity to do it.

Recently, folks have started to question exactly how cool data centers need to be. Even allowing a few more degrees of tolerance can make a huge difference to how much time and money you spend on cooling. Whether it has longer term effects on the device performance is questionable—manufacturers are fairly opaque about data around how these standards are set, though exceeding recommended temperatures can have other impacts, like voiding device warranties.

Power, Infrastructure, Growth, and Sustainability

But the simple question of “Is it necessary?” is definitely answered “yes,” because power isn’t infinite. And, all this matters because improving power usage has a direct impact on both cost and long-term sustainability. According to a recent MIT article, data centers now have a greater carbon footprint than the airline industry, and a single data center can consume the same amount of energy as 50,000 homes.

Let’s contextualize that last number, because it’s a tad controversial. The MIT research paper in question was published in 2022, and that last number is cited from “A Prehistory of the Cloud” by Tung-Hui Hu, published in 2006. Beyond just the sheer growth in the industry since 2006, data centers are notoriously reticent about publishing specific numbers when it comes to these metrics—Google didn’t release numbers until 2011, and they were founded in 1998.

Based on our 1MW = 200 homes metric, the number from the MIT article represents 250MW. One of the largest data centers in the world has a 650MW capacity. So, while you can take that MIT number with a grain of salt, you should also pay attention to market reports like this one. The aggregate numbers clearly show that power availability and consumption are among the biggest concerns for future growth.
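The conversion is simple enough to check by hand, but here is a small sketch of it using the article’s rough 200-homes-per-megawatt figure. The function names are our own, and the ratio is a ballpark, not a precise constant.

```python
# Rough rule of thumb used in this article: 1MW powers about 200 homes.
HOMES_PER_MW = 200


def homes_to_mw(homes):
    """Convert a number of homes to the equivalent megawatts."""
    return homes / HOMES_PER_MW


def mw_to_homes(megawatts):
    """Convert megawatts to the equivalent number of homes."""
    return megawatts * HOMES_PER_MW


cited_mw = homes_to_mw(50_000)       # the 50,000 homes figure
largest_homes = mw_to_homes(650)     # a 650MW data center
market_homes = mw_to_homes(2552)     # the Northern Virginia market

print(f"50,000 homes is about {cited_mw:.0f}MW")
print(f"650MW is about {largest_homes:,} homes")
print(f"2,552MW is about {market_homes:,} homes")
```

By this yardstick, a 650MW facility works out to more than twice the 50,000 homes figure.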

So, we have less-than-ideal reporting and numbers, and well-understood environmental impacts of creating electricity, and that brings us to the complicated relationship between the two factors. Costs of power have gone up significantly and are fairly volatile when you’re talking about non-renewable energy sources. International agencies report that renewable energy sources are now the cheapest form of energy worldwide, but the challenge is integrating renewables into existing grids. While the U.S. power grid is reliable (and the U.S. accounts for half of the world’s hyperscale data center capacity), the Energy Department recently announced that the network of transmission lines may need to expand by more than two-thirds to carry that power nationwide, and invested $1.3 billion to make that happen.

What’s Next?

It’s easy to say, “It’s important that data centers stay online,” as we sort of glossed over above, but the true importance becomes clear when you consider what that data does—it keeps planes in the air, hospitals online, and so many other vital functions. Downtime is not an option, which leads us full circle to our introduction.

We (that is, we, humans) are only going to build more data centers. Incremental savings in power have high impact—just take a look at Google’s demand response initiative, which “shift[s] compute tasks and their associated energy consumption to the times and places where carbon-free energy is available on the grid.”

It’s definitely out of scope for this article to talk about the efficiencies of different types of energy sources. That kind of inefficiency doesn’t directly impact a data center, but it certainly has downstream effects in power availability. It’s probably one reason why Microsoft, considering both its growth in power need and those realities, decided to set up a team dedicated to building nuclear power plants to directly power some of its data centers, and why Amazon dropped $650 million to acquire a nuclear-powered data center campus.

Which is all to say: this is an exciting time for innovation in the cloud, and many of the opportunities are happening below the surface, so to speak. Understanding how the fundamental principles of physics and compute work—now more than ever—is a great place to start thinking about what the future holds and how it will impact our world, technologically, environmentally, and otherwise. And, data centers sit at the center of that “hot” debate.