Editor’s Note

This post has been updated since it was originally published in 2017.

Programs, processes, and threads are all terms that relate to software execution, but you may not know what they really mean. Whether you’re a seasoned developer, an aspiring enthusiast, or you’re just wondering what you’re looking at when you open Task Manager on a PC or Activity Monitor on a Mac, learning these terms is essential for understanding how a computer works.

This post explains the technical concepts behind computer programs, processes, and threads to give you a better understanding of the functionality of your digital devices. With this knowledge, you can quickly diagnose problems and come up with solutions, like knowing if you need to install more memory for better performance. If you care about having a fast, efficient computer, it is worth taking the time to understand these key terms.

What Is a Computer Program?

A program is a sequence of coded commands that tells a computer to perform a given task. There are many types of programs, including programs built into the operating system (OS) and ones to complete specific tasks. Generally, task-specific programs are called applications (or apps). For example, you are probably reading this post using a web browser application like Google Chrome, Mozilla Firefox, or Apple Safari. Other common applications include email clients, word processors, and games.

The process of creating a computer program involves designing algorithms, writing code in a programming language, and then compiling or interpreting that code to transform it into machine-readable instructions that the computer can execute.

What Are Programming Languages?

Programming languages are the way that humans and computers talk to each other. They are formalized sets of rules and syntax.

Compiled vs. Interpreted Programs

Many programs are written in compiled languages such as C, C++, and C#. The source code is a text file that a compiler translates into binary form so it can run on the computer (more on binary form in a few paragraphs). It is the compiled binary, not the text file, that speaks directly to your computer. Compiled programs are typically fast, but they are also more rigid than interpreted programs. That has positives and negatives: you have more control over things like memory management, but you’re platform dependent and, if you have to change something in your code, it typically takes longer to build and test.

There is another kind of program called an interpreted program. It requires an additional program, the interpreter, to take your program’s instructions and translate them into code your computer can run. Compared with compiled programs, interpreted programs are platform-independent (you just have to find a different interpreter, instead of writing a whole new program) and they typically take up less space. Some of the most common interpreted programming languages are Python, PHP, JavaScript, and Ruby.

Ultimately, both kinds of programs are loaded into memory and run in binary form. Programs have to run in binary because your computer’s CPU understands only binary instructions.
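As a small illustration of the "text in, binary out" step, Python exposes its own compile stage. The sketch below turns one line of source text into a code object and then runs it. One caveat to keep in mind: what Python produces here is bytecode for its own virtual machine, not native CPU instructions, so treat this as an analogy rather than a picture of what a C compiler emits. The variable names are illustrative.

```python
# Source code starts life as plain text.
source = "total = 2 + 3"

# compile() translates the text into a code object holding bytecode
# (instructions for Python's virtual machine, not native machine code).
code = compile(source, "<example>", "exec")

# The translated instructions are stored as raw bytes.
print(type(code.co_code).__name__, len(code.co_code) > 0)

# Executing the code object runs the program and fills the namespace.
namespace = {}
exec(code, namespace)
print(namespace["total"])  # prints 5
```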

What Is Binary Code?

Binary is the native language of computers. At their most basic level, computers use only two states of electrical current—on and off. The on state is represented by 1 and the off state is represented by 0. Binary is different from the number system—base 10—that we use in daily life. In base 10, each digit position can be anything from 0 to 9. In the binary system, also known as base 2, each position is either a 0 or a 1.

Perhaps you’ve heard the programmer’s joke, “There are only 10 types of people in the world, those who understand binary, and those who don’t.”
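The joke works because the digits "10" read in base 2 mean the number two. Here is a minimal Python sketch of converting between the two number systems. The helper `to_binary` is a hypothetical name written for this example; it uses the familiar repeated-division-by-2 method, and Python's built-in `bin()` does the same job.

```python
def to_binary(n: int) -> str:
    """Convert a non-negative integer to a binary string by repeated division by 2."""
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next bit, right to left
        n //= 2
    return "".join(reversed(digits))

print(to_binary(10))   # prints 1010
print(bin(10))         # prints 0b1010 (built-in equivalent, with a "0b" prefix)
print(int("10", 2))    # prints 2: the binary digits "10" are the number two
```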

How Are Computer Programs Stored and Run?

Programs are typically stored on a disk or in nonvolatile memory in executable format. Let’s break that down to understand why.

In this context, we’ll talk about your computer having two types of memory: volatile and nonvolatile. Volatile memory (RAM) is temporary working memory that holds the data your computer is actively using. It’s fast and easily accessible, which makes your computer more efficient. However, it’s not permanent. When your computer turns off, this type of memory is cleared.

Nonvolatile memory, on the other hand, keeps its contents until they are deleted, even when the power is off. While it’s slower to access, it can store more information, which makes it a better place to store programs. A file in an executable format is simply one that contains a program in a form your computer can run. Its instructions can be executed directly by your CPU (that’s your processor). Examples of these file types are .exe on Windows and .app on Mac.
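If you are curious what an executable format looks like on disk, most of them begin with a short signature that identifies the format. The sketch below reads the first four bytes of the Python interpreter that is running it (itself a program stored on disk) and compares them with a few well-known signatures. The `KNOWN_FORMATS` table and the `identify` helper are assumptions made for this example, and the list is far from complete.

```python
import sys

# A few common executable signatures (illustrative, not exhaustive).
KNOWN_FORMATS = {
    b"\x7fELF": "ELF (Linux and many Unix systems)",
    b"MZ": "PE (Windows .exe)",
    b"\xcf\xfa\xed\xfe": "Mach-O 64-bit (macOS)",
    b"\xca\xfe\xba\xbe": "Mach-O universal binary (macOS)",
}

def identify(header: bytes) -> str:
    """Return a format name if the header starts with a known signature."""
    for magic, name in KNOWN_FORMATS.items():
        if header.startswith(magic):
            return name
    return "unknown format"

# sys.executable is the path of the interpreter running this script.
with open(sys.executable, "rb") as f:
    header = f.read(4)

print(header, "->", identify(header))
```

The result depends on your operating system, and a wrapper script or launcher in place of the real interpreter would show up as "unknown format".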

What Resources Does a Program Need to Run?

Once a program has been loaded into memory in binary form, what happens next?

Your executing program needs resources from the OS and memory to run. Without these resources, you can’t use the program. Fortunately, your OS manages the work of allocating resources to your programs automatically. Whether you use Microsoft Windows, macOS, Linux, Android, or something else, your OS is always hard at work directing your computer’s resources needed to turn your program into a running process.

In addition to OS and memory resources, there are a few essential resources that every program needs.

- Register. Think of a register as a holding pen for data that a process may need, such as instructions, storage addresses, or other data.

- Program counter. Also known as an instruction pointer, the program counter plays an organizational role. It keeps track of where a computer is in its program sequence.

- Stack. A stack is a data structure that stores information about the active subroutines of a computer program. It is used as scratch space for the process. It is distinguished from dynamically allocated memory for the process that is known as the “heap.”
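To see how these three pieces cooperate, here is a toy machine written in Python. Everything in it is invented for illustration: the instruction names (`LOAD`, `PUSH`, `ADD_POP`, `JUMP`, `HALT`) and the single register are simplifications, and real CPUs have many registers and manage the program counter in hardware.

```python
def run(program):
    """Execute a list of (opcode, argument) pairs on a toy machine."""
    pc = 0          # program counter: index of the next instruction
    register = 0    # a single register holding the value being worked on
    stack = []      # scratch space for temporarily saved values

    while pc < len(program):
        op, arg = program[pc]
        pc += 1                       # by default, move on to the next instruction
        if op == "LOAD":              # put a constant into the register
            register = arg
        elif op == "PUSH":            # save the register's value on the stack
            stack.append(register)
        elif op == "ADD_POP":         # add the top of the stack to the register
            register += stack.pop()
        elif op == "JUMP":            # change where execution continues
            pc = arg
        elif op == "HALT":
            break
        else:
            raise ValueError(f"unknown instruction: {op}")
    return register

program = [
    ("LOAD", 2),        # 0: register = 2
    ("PUSH", None),     # 1: stack = [2]
    ("LOAD", 3),        # 2: register = 3
    ("ADD_POP", None),  # 3: register = 3 + 2
    ("JUMP", 6),        # 4: skip instruction 5
    ("LOAD", 99),       # 5: never executed
    ("HALT", None),     # 6: stop
]
print(run(program))  # prints 5
```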

What Is a Computer Process?

When a program is loaded into memory along with all the resources it needs to operate, it is called a process. You might have multiple instances of a single program. In that situation, each instance of that running program is a process.

Each process has a separate memory address space. That separation is helpful because it means that a process runs independently and is isolated from other processes. The trade-off is that processes cannot directly access data in other processes. Switching from one process to another also takes some amount of time (relatively speaking) for saving and loading registers, memory maps, and other resources.

Having independent processes matters for users because it means one process won’t corrupt or wreak havoc on other processes. If a single process has a problem, you can close that program and keep using your computer. Practically, that means you can end a malfunctioning program and keep working with minimal disruptions.
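You can watch one program become several processes with a few lines of Python. This sketch, written under the assumption that a Python interpreter is available at `sys.executable`, starts two more instances of that same interpreter and asks each one for its process ID. The names `CHILD_CODE`, `procs`, and `child_pids` are illustrative.

```python
import os
import subprocess
import sys

# The tiny program each new process will run: report its own process ID.
CHILD_CODE = "import os; print(os.getpid())"

# Start two instances of the same program (the Python interpreter).
procs = [
    subprocess.Popen(
        [sys.executable, "-c", CHILD_CODE],
        stdout=subprocess.PIPE,
        text=True,
    )
    for _ in range(2)
]

# Collect what each child printed, then wait for it to exit.
child_pids = [int(p.communicate()[0].strip()) for p in procs]
parent_pid = os.getpid()

print("parent process:", parent_pid)
print("child processes:", child_pids)
```

Each instance reports a different ID because the operating system tracks it as its own process, with its own memory. The parent can only learn what the children did through an explicit channel, here the text they printed.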

What Are Threads?

The final piece of the puzzle is threads. A thread is the unit of execution within a process.

When a process starts, it receives an assignment of memory and other computing resources. Each thread in the process shares that memory and resources. With single-threaded processes, the process contains one thread.

In multi-threaded processes, the process contains more than one thread, and the process is accomplishing a number of things at the same time (to be more accurate, we should say “virtually” the same time—you can read more about that in the section below on concurrency).

Earlier, we talked about the stack and the heap, the two kinds of memory available to a thread or process. Distinguishing between these kinds of memory matters because each thread will have its own stack. However, all the threads in a process will share the heap.

Some people call threads lightweight processes because they have their own stack but can access shared data. Since threads share the same address space as the process and other threads within the process, it is easy to communicate between the threads. The disadvantage is that one malfunctioning thread in a process can impact the viability of the process itself.
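The difference between per-thread and shared memory is easy to see in a short Python sketch. Each thread below computes a value in a local variable, which belongs to that thread alone, and then appends it to one list that every thread can see. The names `worker` and `shared_results` are made up for this example, and the lock is there so the threads take turns updating the shared list.

```python
import threading

shared_results = []        # one list object, visible to every thread
lock = threading.Lock()    # makes the threads take turns updating the list

def worker(n: int) -> None:
    local_square = n * n   # local variable: private to this thread's call
    with lock:
        shared_results.append(local_square)  # shared data: seen by all threads

threads = [threading.Thread(target=worker, args=(i,)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()               # wait for every thread to finish

print(sorted(shared_results))  # prints [0, 1, 4, 9]
```

The results are sorted before printing because the order in which threads finish is not guaranteed.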

How Threads and Processes Work Step By Step

Here’s what happens when you open an application on your computer.

- The program starts out as a text file of programming code.

- The program is compiled or interpreted into binary form.

- The program is loaded into memory.

- The program becomes one or more running processes. Processes are typically independent of one another.

- Threads exist as a subset of a process.

- Threads can communicate with each other more easily than processes can.

- Threads are more vulnerable to problems caused by other threads in the same process.

Computer Process vs. Threads

| Aspect | Processes | Threads |
|---|---|---|
| Definition | Independent programs with their own memory space. | Lightweight, smaller units of a process that share memory. |
| Creation Overhead | Higher overhead due to separate memory space. | Lower overhead as they share the same memory space. |
| Isolation | Processes are isolated from each other. | Threads share the same memory space. |
| Resource Allocation | Each process has its own set of system resources. | Threads share resources within the same process. |
| Independence | Processes are more independent of each other. | Threads are dependent on each other within a process. |
| Failure Impact | A failure in one process does not directly affect others. | A failure in one thread can affect others in the same process. |
| Synchronization | Less need for synchronization, as processes are isolated. | Requires careful synchronization due to shared resources. |
| Example Use Cases | Running multiple independent applications. | Multithreading within a single application for parallelism. |
| Memory Usage | Typically consumes more memory. | Consumes less memory compared to processes. |
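The synchronization row deserves a concrete example. When several threads update the same value, each update is really a read, a change, and a write, and two threads can step on each other in between. The sketch below is one common way to guard against that in Python, using a lock. The `Counter` class is a hypothetical helper written for this example.

```python
import threading

class Counter:
    """A shared counter that is safe to update from several threads."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        with self._lock:       # only one thread at a time may enter
            self.value += 1    # read, add, write back

counter = Counter()

def add_many(times: int) -> None:
    for _ in range(times):
        counter.increment()

threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter.value)  # prints 40000
```

Separate processes would not need this lock, because each one would have its own copy of the counter. The flip side is that they would need some other mechanism to combine their results.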

What About Concurrency and Parallelism?

A question you might ask is whether processes or threads can run at the same time. The answer is: it depends. On a system with multiple processors or CPU cores, multiple processes or threads can execute simultaneously. On a single-processor system, however, true simultaneous execution isn’t possible. In that case, a scheduling algorithm shares the CPU among the running processes or threads, creating the illusion of parallel execution. Each task is allocated a “time slice,” and the switching between tasks is fast enough that it is typically imperceptible to users. The two modes have names: “parallelism” means genuinely simultaneous execution, while “concurrency” means interleaving tasks over time so that they appear to run simultaneously.
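Time slicing is easier to picture with a toy scheduler. The sketch below is a simplified round-robin scheduler in Python, not how any real operating system is implemented: each task is a generator that does one unit of work and then yields, and the scheduler gives every task one slice in turn. The names `task` and `round_robin` are illustrative.

```python
from collections import deque

def task(name, steps):
    """A task that does `steps` units of work, pausing after each one."""
    for i in range(1, steps + 1):
        yield f"{name}{i}"   # finish one unit, then hand the CPU back

def round_robin(tasks):
    """Run tasks one time slice at a time and return the order of execution."""
    queue = deque(tasks)
    timeline = []
    while queue:
        current = queue.popleft()
        try:
            timeline.append(next(current))  # run one time slice
            queue.append(current)           # not finished: back of the line
        except StopIteration:
            pass                            # finished: drop it from the queue
    return timeline

timeline = round_robin([task("A", 3), task("B", 2), task("C", 1)])
print(timeline)  # prints ['A1', 'B1', 'C1', 'A2', 'B2', 'A3']
```

Only one task runs at any moment, yet all three make progress. That is concurrency without parallelism.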

How Google Chrome Uses Processes and Threads

To illustrate the impact of processes and threads, let’s consider a real-world example with a program that many of us use, Google Chrome.

When Google designed the Chrome browser, they faced several important decisions. For instance, how should Chrome handle the fact that many different tasks often happen at the same time when using a browser? Every browser window (or tab) may communicate with several servers on the internet to download audio, video, text, and other resources. In addition, many users have 10 to 20 browser tabs (or more…) open most of the time, and each of these tabs may perform multiple tasks.

Google had to decide how to handle all of these tasks. They chose to run each browser window in Chrome as a separate process rather than a thread or many threads. That approach brought several benefits.

- Running each window as a process protects the overall application from bugs and glitches.

- Isolating a JavaScript program in a process prevents it from using too much CPU time and memory and making the entire browser unresponsive.

That said, Google’s design decision comes with a trade-off. Starting a new process for each browser window has a higher fixed cost in memory and resources compared to using threads. They were betting that their approach would end up with less memory bloat overall.

Using processes instead of threads provides better memory usage when memory is low. In practice, an inactive browser window is treated as a lower priority. That means the operating system may swap it to disk when memory is needed for other processes. If the windows were threaded, it would be more difficult to allocate memory efficiently, which would ultimately cost performance.

For more insights on Google’s design decisions for Chrome, see Google’s Chromium Blog or the Chrome Introduction Comic.

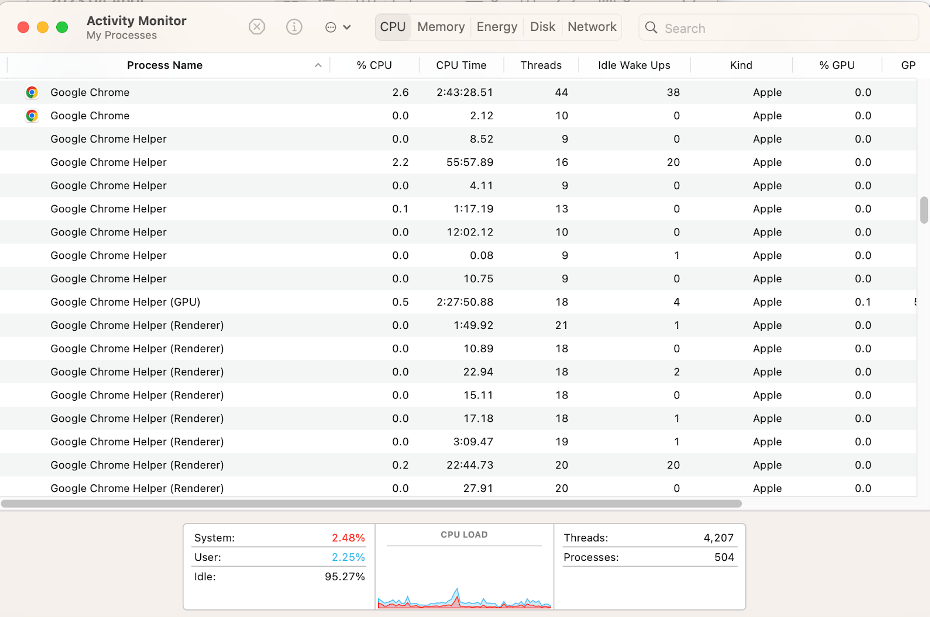

The screen capture below shows the Google Chrome processes running on a MacBook Air with many tabs open. You can see that some Chrome processes are using a fair amount of CPU time and resources (e.g., the one at the top is using 44 threads) while others are using fewer.

Activity Monitor on the Mac (or Task Manager in Windows) can be a valuable ally in fine-tuning your computer or troubleshooting problems. If your computer is running slowly or a program or browser window isn’t responding for a while, you can check its status using the system monitor.

In some cases, you’ll see a process marked as “Not Responding.” Try quitting that process and see if your system runs better. If an application is a memory hog, you might consider choosing a different application that will accomplish the same task.

Made It This Far?

We hope this Tron-like dive into the fascinating world of computer programs, processes, and threads has cleared up some questions.

At the start, we promised clarity on using these terms to improve performance. You can use Activity Monitor on the Mac or Task Manager on Windows to close applications and processes that are malfunctioning. That’s beneficial because it means you can end a malfunctioning program without the hassle of turning off your computer.

Still have questions? We’d love to hear from you in the comments.

FAQ

What are computer programs?

Computer programs are sets of coded instructions written in programming languages to direct computers in performing specific tasks or functions. Ranging from simple scripts to complex applications, computer programs enable users to interact with and leverage the capabilities of computing devices.

What are computer processes?

Computer processes are instances of executing computer programs. They represent the active state of a running application or task. Each process operates independently, with its own memory space and system resources, ensuring isolation from other processes. Processes are managed by the operating system, and they facilitate multitasking and parallel execution.

What are computer threads?

Computer threads are smaller units within computer processes, enabling parallel execution of tasks. Threads share the same memory space and resources within a process, allowing for more efficient communication and coordination. Unlike processes, threads operate in a cooperative manner, sharing data and context, making them suitable for tasks requiring simultaneous execution.

What is the difference between processes and threads?

Computer processes are independent program instances with their own memory space and resources, operating in isolation. In contrast, threads are smaller units within processes that share the same memory, making communication easier but requiring careful synchronization. Processes are more independent, while threads enable concurrent execution and resource sharing within a process. The choice depends on the application’s requirements, balancing isolation with the benefits of parallelism and resource efficiency.

What is the difference between concurrency and parallel processing?

Concurrency involves the execution of multiple tasks during overlapping time periods, enhancing system responsiveness. It doesn’t necessarily imply true simultaneous execution but rather the interleaving of processes to create an appearance of parallelism. Parallel processing, on the other hand, refers to the simultaneous execution of multiple tasks using multiple processors or cores, achieving genuine parallelism. Concurrency emphasizes efficient task management, while parallel processing focuses on running tasks at the same moment, which improves performance for work that can be divided into independent subtasks.